I had the good fortune to be involved in some conceptual work at Medtronic, which included adding AI agents to diabetes management applications. The principle was that the agent would learn your dosing and glucose patterns and, over time, get really good at predicting your insulin requirements.

For this prototype, we used ProtoPie. The cool thing about ProtoPie is that it has an extension called ProtoPie Connect that can pass messages back and forth with an external RESTful service. As it happens, the AI service we were working with exposed exactly that kind of interface, so we wired up the prototype to actually talk to it.

The first thing we did was to “gamify” the experience of getting to know the agent by giving it a character. Users didn’t warm to him, and he ended up on the cutting-room floor after usability testing, but it was a neat idea: he tracked users’ carbs, glucose, and dosing over a two-week period and then gradually faded into the background.

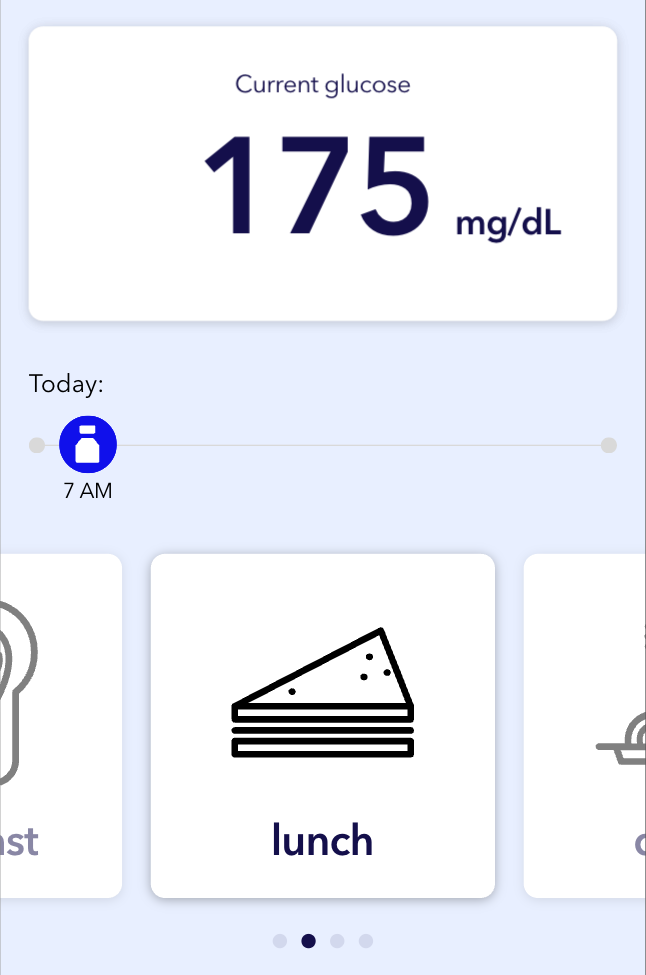

Next we experimented with a highly simplified UI that “got out of the way” of users who didn’t want to be closely involved in tracking all of their diabetes data. At this point the agent stays almost entirely in the background, appearing only when it needs input, for example when it knows the user usually gets an urge for a snack. Here it is prompting the user about the lunch they usually eat. The “lunch” card can be flipped over to reveal more details and get a dosage recommendation.

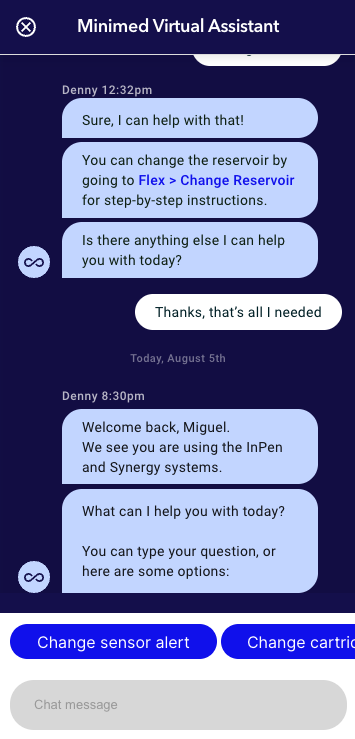

For times when users needed more help, a conversational assistant was available to talk them through their questions, provide answers and product support, or connect them automatically to relevant resources.